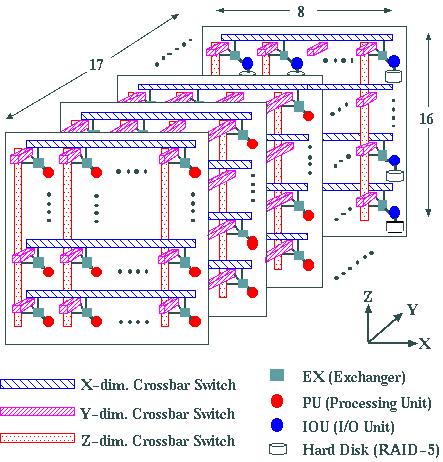

The CP-PACS is an MIMD (Multiple Instruction-streams Multiple Data-streams) parallel computer with a theoretical peak speed of 614 Gflops and a distributed memory of 128 Gbyte. The system consists of 2048 processing units (PU's) for parallel floating-point processing and 128 I/O units (IOU's) for distributed input/output processing. These units are connected in an 8x17x16 three-dimensional array by a Hyper Crossbar network. The well-balanced performance of CPU, network and I/O devices supports the high capability of CP-PACS for massively parallel processing.
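The figures above can be cross-checked with a few lines of arithmetic. The following is only a sketch: the per-PU values are averages derived here on the assumption that peak speed and memory are spread evenly over the PU's and that 1 Gbyte = 1024 Mbyte; they are not quoted specifications.

```python
# Figures quoted in the text.
PEAK_GFLOPS = 614
MEMORY_GBYTE = 128
NUM_PU = 2048
NUM_IOU = 128
ARRAY_SHAPE = (8, 17, 16)


def array_nodes(shape):
    """Number of crossing points in a three-dimensional array."""
    nodes = 1
    for extent in shape:
        nodes *= extent
    return nodes


# PU's and IOU's together fill the 8x17x16 array exactly.
total_units = NUM_PU + NUM_IOU
assert array_nodes(ARRAY_SHAPE) == total_units

# Derived averages (assumption: even distribution over the PU's).
per_pu_mflops = PEAK_GFLOPS * 1000 / NUM_PU
per_pu_mbyte = MEMORY_GBYTE * 1024 / NUM_PU

print(f"units in array : {total_units}")
print(f"peak per PU    : {per_pu_mflops:.1f} Mflops")
print(f"memory per PU  : {per_pu_mbyte:.0f} Mbyte")
```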

Each PU of the CP-PACS has a custom-made superscalar RISC processor with an architecture based on PA-RISC 1.1. In large-scale scientific and engineering computations on a RISC processor, the performance degradation that occurs when the data size exceeds the cache memory capacity is a serious problem. For the CP-PACS processor, an architectural enhancement called PVP-SW (Pseudo Vector Processor based on Slide Window) has been developed to resolve this problem while still maintaining upward compatibility with the PA-RISC architecture.

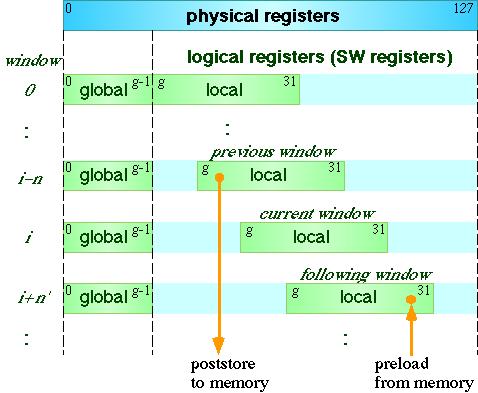

The PVP-SW architecture has two elements. The Slide Window Mechanism allows the use of a large number of physical registers through a logical register window of 32 registers that slides continuously along the physical registers. The Preload and Poststore instructions, which can be issued without waiting for the completion of memory access, allow pipelined access to main memory, so that a long memory access latency can be tolerated. With these features, efficient vector processing is realized in spite of the superscalar architecture of the CP-PACS processor.
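The interplay of the two elements can be illustrated with a toy software model. This is a minimal sketch and not the actual hardware design: the count of 128 physical registers, the wrap-around addressing, the `preload` method that targets a register ahead of the window, and all names below are assumptions made for illustration. Only the window size of 32 comes from the text.

```python
class SlideWindowRegisters:
    """Toy model of a logical register window sliding over physical registers."""

    def __init__(self, num_physical=128, window=32):
        # num_physical is an assumed value; window=32 follows the text.
        self.physical = [0.0] * num_physical
        self.window = window
        self.base = 0  # physical index of logical register 0

    def read(self, logical):
        """Read a register addressed through the current logical window."""
        if not 0 <= logical < self.window:
            raise IndexError("logical register outside the window")
        return self.physical[(self.base + logical) % len(self.physical)]

    def preload(self, offset, value):
        """Model a preload: deposit a value `offset` registers ahead of the
        window base, possibly beyond the current logical window."""
        self.physical[(self.base + offset) % len(self.physical)] = value

    def slide(self, step=1):
        """Advance the logical window along the physical registers."""
        self.base = (self.base + step) % len(self.physical)


def pipelined_sum(data, distance=40):
    """Sum `data`, issuing each preload `distance` iterations before use.

    `distance` stands in for the memory latency being hidden; it must be
    smaller than the number of physical registers in this model.
    """
    regs = SlideWindowRegisters()
    # Prologue: fill the pipeline with the first `distance` elements.
    for k in range(min(distance, len(data))):
        regs.preload(k, data[k])
    total = 0.0
    for i in range(len(data)):
        if i + distance < len(data):
            regs.preload(distance, data[i + distance])  # issued early
        total += regs.read(0)  # operand is already in a register
        regs.slide(1)
    return total
```

In this model the loop body always reads logical register 0, while the data it needs was requested `distance` iterations earlier, which is the sense in which a long memory latency is overlapped with computation.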

The Hyper Crossbar network of CP-PACS is made of crossbar switches in the x, y, and z directions, connected together by an Exchanger at each of the three-dimensional crossing points of the crossbar array. Each exchanger is connected to a PU or IOU. Thus any pattern of data transfer can be performed with the use of at most three crossbar switches.
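The bound of three crossbar switches follows because two nodes differ in at most three coordinates, and one crossbar can correct one coordinate. The sketch below illustrates this; the fixed x, y, z ordering and the function name `route` are assumptions for illustration, since the text does not state how the hardware orders the hops.

```python
ARRAY_SHAPE = (8, 17, 16)  # x, y, z extents quoted in the text


def route(src, dst, shape=ARRAY_SHAPE):
    """Return the crossbar hops from src to dst as (axis, from, to) tuples.

    Each hop uses one crossbar along a single axis and changes only that
    coordinate, so at most three hops are ever needed.
    """
    for node in (src, dst):
        if any(not 0 <= c < n for c, n in zip(node, shape)):
            raise ValueError(f"node {node} is outside the array")
    hops = []
    current = list(src)
    for axis, name in enumerate("xyz"):  # assumed hop order
        if current[axis] != dst[axis]:
            nxt = current.copy()
            nxt[axis] = dst[axis]
            hops.append((name, tuple(current), tuple(nxt)))
            current = nxt
    return hops


if __name__ == "__main__":
    for hop in route((0, 0, 0), (7, 16, 15)):
        print(hop)
```

Nodes that share two coordinates sit on the same crossbar and need a single hop; only nodes that differ in all three coordinates need the full three.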

Since the network has a huge switching capacity due to the large number of crossbar switches, the sustained data transfer throughput in general applications is very high.

Data transfer on the network is made through Remote DMA (Remote Direct Memory Access), in which processors exchange data directly between their respective user memories with a minimum of intervention from the operating system. This leads to a significant reduction in the startup latency, and a high throughput.

The network allows a hardware bisection of PU arrays in each of the x, y and z directions. Hence the full system can be divided into up to 8 independent subsystems.
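The count of 8 comes from one bisection per direction, giving 2 x 2 x 2 parts. A minimal sketch of such a partitioning is shown below. It assumes a PU array of 8x16x16 (the 8x17x16 array without the plane of IOU's) and a cut at the midpoint of each bisected direction; neither the cut positions nor the names used here are taken from the hardware documentation.

```python
from collections import Counter
from itertools import product

PU_SHAPE = (8, 16, 16)  # assumed PU array: 8 * 16 * 16 = 2048 PU's


def num_subsystems(bisect):
    """Number of independent subsystems for the chosen bisected axes."""
    return 2 ** sum(bisect)


def subsystem_id(coord, bisect=(True, True, True)):
    """Map a PU coordinate to a subsystem index (midpoint cuts assumed)."""
    sid = 0
    for c, size, cut in zip(coord, PU_SHAPE, bisect):
        if not 0 <= c < size:
            raise ValueError(f"coordinate {coord} is outside the PU array")
        if cut:
            sid = sid * 2 + (1 if c >= size // 2 else 0)
    return sid


if __name__ == "__main__":
    all_pus = product(*(range(n) for n in PU_SHAPE))
    sizes = Counter(subsystem_id(pu) for pu in all_pus)
    print(dict(sizes))
```

With all three directions bisected, each of the 8 subsystems in this model holds 256 PU's; bisecting fewer directions yields 2 or 4 larger subsystems.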

Hyper Crossbar network and distributed disk system

The distributed disk system of CP-PACS is connected to 128 IOU's on the 8x16 plane at the end of the y direction of the Hyper Crossbar network by a SCSI-II bus. RAID-5 disks are used for fault tolerance. The IOU's handle parallel file I/O requests issued by the PU's in an efficient and distributed way using Remote DMA through the Hyper Crossbar network.

The HIPPI connection to the front host is attached to one of the IOU's. A special FTP protocol has been developed for a high speed file transfer between the distributed disk system of CP-PACS and the disk storage of the front host.